|

|

Blog

Helicopters (2010/finals) writeup

| heli by Mate Nagy on 2010-??-?? | Tags: 2010, finals, 2010finals, writeup, simulation, PID |

Abstract: We wanted a big, interactive game for the finals, because we thought it would increase this so-called "fun factor". The idea was that we could have an intricate real-time control problem, because this area seemed to be relatively unexplored by past Challenge24 finals. It also happens that some of us work for a company that deals in helicopter simulation - this is where the topic came from.

Story of the visual

It was apparent from the start that for a 3D game, some kind of 3D visualization would be necessary. However, we didn't want to use some kind of proprietary 3D engine we would install on the contestants' computers (as it happened in more than one past finals) - because we strongly care about the open nature of this competition, ... and also because many of us involved in this year's problem set don't like programming on Windows, sorry :)

So we needed to get some kind of video that we'd generate to the contestants. First we investigated the possibility of using a server push gif (blast from the past), or mjpeg - because we thought these would be the most portable and easy to use. It turns out, Internet Explorer still refuses to support either of these, so this idea was dropped.

So we needed to do video streaming. We didn't want to have 30 computers to generate 30 separate video streams, and I thought that running multiple (10+) OpenGL windows on one computer (preferably in headless mode) would be impossible. In retrospect, I didn't check this, so maybe it would've been possible to run lots of OpenGL windows, take the data with glReadPixels and encode that (would've saved a bit of work). Anyhow, we thought we needed a software renderer.

I checked a number of software renderers that I could find. They were either way too complicated or very buggy; mostly both (say hello to Crystal Space). The most promising one I found was this one: Renderer 2.x - funnily, it popped up on Reddit a while after I first looked at it. However, this one was still quite complex, used a lot of C++ (of which we're paranoid), and seemed to be optimized for getting as many FPS as possible from a single computer, by using all cores with sophisticated parallel programming. We needed the opposite: sustaining a low FPS with as little CPU use as possible. It looked like it was necessary to write our own software renderer.

A while ago I had written Animator , a tool that we used a lot in the competition (e.g. for the polar tanks visual and the helicopter map) - it seemed to be a good idea to go with a similar design: read from STDIN, render, dump data. So I sat down and wrote this tool. It's called Projector. (I'd like to release it later on, but can't right now - it's utterly undocumented and somewhat buggy. You can get it at svn://repo.hu/projector ) Other than for the Heli visual, we used it for visualizing solutions to the Ants problem.

Of course, writing the software renderer was a bit harder than I expected. Rendering triangles so there are no 1-pixel holes and artifacts between the polys of a big mesh full of small, thin or plain old nasty triangles turned out to be quite difficult to get right. (We had some problems with the Triangles task in the EC too, so in the current organizer team "drawing triangles is hard" is kind of a catch phrase.)

After drawing the scenery, we still had to get the image to the contestants. I explored the available open source video streaming solutions - and of course, everything had problems. Encoding real-time generated raw data (rather than pre-edited video files or V4L streams) was the smaller one, but it did exclude some solutions. Lag, compatibility, and "ease of use" were the real killers.

In the end, ffmpeg seemed to be the best choice. We used the 0.5.1 stable release. Using ffserver as a streaming server/multiplexer worked, kinda-sorta. There was some lag, and getting the whole thing to work was rather complicated. After some experimentation, pushing a Flash video directly to a web browser looked to be the nicest setup. Since we planned to give one stream to one team, and the teams couldn't look at each other's streams anyway, we could make it without using ffserver at all: connecting to the video starts the software renderer, encodes the result with ffmpeg, and gives it to the browser right away.

Actually, we tested the streams with mplayer and VLC as well. Both worked, but with horrific lag, while the web version had very little lag (especially with Firefox).

Story of the terrain

A random-generated hilly terrain seemed to be a cool setting for a helicopter game (anyone remember the old DOS Comanche game? - although that used voxels). I looked for an existing, sophisticated terrain generator, and ended up using L3DT . It proved to be a nice tool, and I happen to like the output I got out of it :) The map data generated by L3DT was a height map (16384*16384*4 bytes of a float[] array, in a single binary file), and a single texture (16834x16384 image) that had the lighting baked in. Generating this map took L3DT about a full day - although I had to experiment and fiddle with the options to get this result, so the total map design time was about a week.

Triangulating such a terrain is a common problem in these applications. To give decent detail, you need a lot of triangles; but then, to make enough scenery visible, you need a lot more of those lot of triangles, and the numbers explode. Because of the software rendering, the options were very limited: few triangles, and very little texture mapping. Originally, I didn't even write a texture mapper. The entire terrain used simple vertex-shaded triangles, only some models added later (such as the helipads, and the church object) used textures.

There are some nice solutions to the LOD (level of detail) problem, with a good bunch of available literature. These commonly depend on dividing and merging individual terrain triangles in run time. Also, the triangles are usually axis-aligned, independent of the terrain details represented by the triangles; these methods rely on texture mapping to display enough detail on far-away terrain made of few triangles.

I thought I could achieve better results if I distributed the triangle points not evenly, but rather according to the represented terrain. I did this by taking the second derivative of the terrain height as a probability function (in plain words: change in terrain slope gives a higher value). I distributed random points over the map using this probability function. The result looked like this:

Terrain points (4096x4096 PNG, 10 megs)

This approach turned out to be adequate, although it tended to generate too many or too few points in some places (too many on ragged mountainsides - this is why the mountain walls around the desert look so "detailed"). The point distribution mechanism could be refined a bit, for example by culling points that lie close to a 3D line between two neighboring points.

I generated the same point map with three different point densities, for three detail levels. 512x512 block edges are visible on the image above (in the form of slightly more visible points): I split the map along these edges, using the same points on the edges for all detail levels. This way, displaying two different detail blocks next to each other doesn't produce glitches on the common edge. The resulting blocks could have different levels of detail, and invisible blocks could be ignored altogether (using a quadtree and frustum culling).

I planned to do a Delaunay triangulation of these points to get the triangles of the terrain; invididual triangulations of each 512x512 block, for each of the three detail levels. I used Qhull to do this.

Next step was coloring the points, according to the texture generated by L3DT. Just taking the texture pixel under the terrain point didn't give terribly good results, especially where triangles where scarce. Finally, I rendered the 2D projection of the map triangles (ignoring Z/height) "onto the texture", sampled the texels under the resulting triangle pixels, and distributed the texel color to an accumulated average color of the vertices of the triangle, according to their distance from the pixel. This way I hoped to get a set of vertex colors that, being rendered as shaded triangles, made a good representation of the texture under the triangle. (Incidentally, this 2D triangle rendering code used the EC Triangles task evaluator as a base, and had its own share of "drawing triangles is hard" problems.)

However, this fails on the edges of blocks - the individually colored blocks won't have common colors on edge points. My original solution was to triangulate the entire point set at once for coloring, then use the colors at the edge points for the block edges (that have the same points for whatever level of detail). This worked fine on the small 512x512 test map I generated for development. On the real map, Qhull's memory and CPU use seemed to run away; a day and the 8G of RAM in my computer wasn't enough to triangulate the millions of points in the real point set (I stopped the attempts after another 8G of swap was eaten). Notably, Qhull also seems to have a hard limit of 16M (24 bit) vertices and edges - these limits were violated by the original point set with extreme prejudice (but the memory wasn't enough even when the number of points was reduced below 16M).

This problem was solved by taking all points on edges, then all triangles of all the highest LOD terrain blocks that contained any of these edge points; this whole point+triangle set then was used as input for the colorizer, and the output colors on the edges were used for colors on the edges of individually colored terrain blocks.

Populating the terrain with objects was a simpler business. I wrote an interactive "level editor" in AWK, using Animator for 2D map display, Projector for 3D preview, and a small C program for querying terrain height at a specific X/Y; this all was connected using Plumb . I googled the web for "free 3D models", and converted a few objects from the resulting flood of horror (although I modelled the cow myself in Blender). I wonder how many of the "easter eggs" did the teams find; there were multiple churches with cows or skeletons in the belltower, a Planet Of The Apes statue of liberty stuck out from some beach, among other miscellaneous displays. Taking only two days to decorate 16x16 km of terrain results in cheap thrills :P

I believe the single piece of real, good 3D modeling in the whole game was the helicopter model, which was made by a graphics designer colleague of mine. I offer my thanks to him here for his contribution.

The whole data set in the end contained 455 placed objects (including 287 helipads), and 27.7M triangles in the terrain. This makes a roughly 1.7G text file in Projector's input language. Of course, parsing this takes a time measurable in minutes, which is way too long a time when launching a video stream. When parsed and loaded, it also occupies a quite significant chunk of memory (about 3G). Allocating that memory for each video stream was obviously not feasible. I wrote a command in Projector that parsed the input, but without drawing or rendering anything, dumped the binary representation of parsed objects on STDOUT; this file could be then mmapped read-only with a different command. This way, all instances of the 3D renderer use the same terrain data set in shared memory. The amount of data is still rather a lot, so the Heli visual system runs only on 64-bit Linux, and needs at least 4G of RAM.

Depending on the surrounding terrain, Projector used about 6-15% of available CPU for each 320x240 video stream (on the relatively recent desktop PCs we used for servers). ffmpeg's CPU use (for the video encoding) was negligible. We used three quad-core PCs with 8G RAM as our "render farm" (two of these PCs also ran the three projectors in the main hall). Total CPU usage on these boxes during the contest stayed around a comfortable 25% (almost all teams were viewing video streams, especially in the second 12 hours).

Story of the simulation

Our idea was to use an already available 3D rigid body physics simulation as the base of the game, namely the Open Dynamics Engine , or ODE.

Helicopters were modelled as simple boxes. A more math-savvy organizer got himself into helicopter flight dynamics literature, and wrote up a couple of formulas that modelled the flight forces on the helicopter's box body. I handled the map objects (helipads and so on) in ODE as cylinders. Originally, the main rotor disc was also modelled as a cylinder, but later I found out that ODE didn't handle cylinder-cylinder collisions; so in the final game the collision volume of the rotor disc was actually a thin box.

At first, I planned to use a low-detail triangle mesh for colliding with the terrain, but luckily ODE has built-in support for height fields. There was some trouble with this; especially in the Edge Mountains, which I have drawn in L3DT by hand (basically saying "make this part reeeeally high" in the low-resolution "design map"). L3DT couldn't deal with the sudden change of height parameters very well, and the inside of the Edge Mountain area is very variable in height. This resulted in a massive amount of triangles in the terrain engine, but ODE didn't like it very much either. When helicopters collided with the Edge Mountain, ODE would often crash with an assertion error, because NaNs popped up in the results of the simulation step.

It was a long fight to avoid these errors. In the end, we used a hand-compiled ODE on the simulation server, with --disable-asserts, and a bunch of extra code in the Heli simulator that checks for NaNs and terminates the offending helicopters, resetting them to nearby helipads in a neutral state. Luckily, these problems occur with nasty collisions only (like rolling down the side of Edge Mountain), so these helicopters would be doomed to a crash anyway.

This issue came up during network testing, when I ran 90 AI instances from a single PC over a LAN to the simulator PC. Everything went fine for a while, but then the AIs ran out of memory (since they stored all map sectors they received from the simulator). The constant swapping made the AI controllers lose cycles, so all 90 helicopters started crashing into their surroundings in a loop. This exposed the physics stability problems pretty quickly...

The architecture of the helicopter game looks like this:

The simulation PC runs three programs: "sim" - the simulation server, "ctrl" - the control server, and "vis" - the visual server. All three use a common shared memory block. The simserver uses SIGUSR1 to notify ctrl and vis of a 10hz update. When receiving the signal, both ctrl and vis copy the information they need to their own private memory. There is a single lock in the shmem, which is held by ctrl when writing a control input, and by sim when it's reading the control inputs. Ctrl and vis can be stopped/started independently of the simulation.

The simserver has no external interface other than the shmem; it maintains helicopter and team status, runs the physics in a loop, notifies ctrl and vis of cycles. It also records the team status and heli positions for one team in every cycle in a binary dumpfile (so in each 3s period, all teams get recorded). After a simulator crash, status can be restored from the dumpfile (luckily, this wasn't necessary during the contest).

The control server is a single-threaded TCP server based on libevent. It reads simulation status from shmem on SIGUSR1, and writes the update to all connected clients. Commands from clients get written to the shmem in some form; usually to a block in the shmem that holds the current flight controls for each helicopter. As mentioned before, a lock is held while updating the controls.

The visual server is a TCP server just like ctrl, but much simpler. On each SIGUSR1, it writes the visual data to all connected clients; this data is the position, orientation, and current mission target (if any) for each helicopter.

Render PCs run a relay program, whose task is to get the visual data from the visual server, and retransmit it to the visualizers. This is necessary because the visual data uses quite a bit of bandwidth, and transmitting it 30 times would be hard on the simulation PC. This way, it only needs to send it to each render PC once.

A visualizer is a pipeline that gets visual data from the relay with netcat, pipes it to an AWK that processes it into Projector instructions, piped to Projector, which is then piped to ffmpeg, which is then piped to the client's browser. The whole thing runs behind xinetd (the HTTP interface is actually a bunch of echos in shell scripts).

Story of the game

The original concept wasn't much more than "game with helicopters"; but the delivery idea came pretty soon. It's easily implementable, has clear rules, and has pretty involved strategy (theoretically, the three helicopters of a team could be organized so their delivery routes are optimal over multiple jobs). There were a lot of other ideas during brainstorming. For example, we were considering an area of the map where the terrain radar wouldn't work; instead, there would be big light towers with colored lights, and the teams would have to process the video and triangulate their own position somehow. We were also thinking about more complicated death match scenarios with weapons, blimp hunting, and so on. In the end, the flight control task itself turned out to be complex enough. Intro missions were a quite late addition to the game - we're lucky that they came up, because without them, the helicopter task would probably have been entirely ignored.

We expected that controlling the helicopter would be non-trivial. We experienced this soon after the simulation started running, and we wrote our first "manual drive" program - drive.sh (a blend of AWK + netcat + Animator + Plumb). Pitch and roll were almost impossible to control by hand, so we needed some kind of computer help right away. Height control in itself was really easy to do (as I overheard in corridor conversations, this was the general experience of contestants as well), but pitch/roll were really tricky.

This was when I learned of PD controllers from a fellow organizer. Implementing one (based on Wikipedia of all things) was really easy, added up to about 20 lines of AWK. This was the "ORIENT" mode of drive.sh: instead of passing on the pitch/roll controls directly, it tried to keep the pitch/roll close to the controls. It made the helicopter manually drivable after very little tuning of the PD parameters. Later on (before another organizer wrote our nice AI based on drive.sh), I fine-tuned the PD factors, with the help of plotting the desired values, actual values, control outputs and current error in real-time (using frtplot ).

A PD controller is enough (without an integrator term) if we have a good model and know the external forces. For example our height control looked like

collective = 9.81 + kp*e + kd*delta_e

Which is fine if the weight is exactly 9.81, but if the helicopter is leaning forward or we are carrying some cargo, then the collective should be greater than 9.81 at the target position. So in those cases our drive.sh results equilibrium a bit below the target height. This error can be fixed by a small integrator term (which integrates the error over time and sets the force based on that) or by having better knowledge of the model (measuring the carried height and calculating roll and pitch angles) and modifying the collective force based on that. However in practice it turned out the PD controller gives enough precision in the simulation and is easier to use (only two tunable parameters instead of three) so we only wrote about the PD terms in the Appendix.

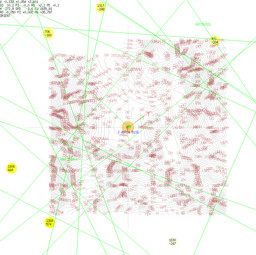

A screenshot of drive.sh in action; the green lines are delivery jobs, yellow blobs are radar blips. The blue "J" line is the ID and distance of a job. The green arrow is the desired orientation of the helicopter, the red arrow is the current orientation, the (barely visible, at the center) black arrow is the current velocity. The transparent orange circle can be moved to adjust the cyclic stick (or, in ORIENT mode, to give the desired pitch/roll).

The AI used two set of controllers: one set the height, roll, pitch and yaw at desired positions, and another set the desired positions based on the target helipad and current terrain (so it used cascade controllers).

The first and some of the second set of controllers were PD controllers with saturated command outputs (for example the desired pitch was in the [-30,30] degree interval).

A simple logic managed the second set of controllers: first it set a target z (current height + 150) then when it was reached set the desired yaw angle to the direction of the target, finally it set the pitch angle using a PD controller based on the distance from the target. (So the pitch was constant 30 degree while we were far from the target. The height was kept at the maximum of the current z position and highest terrain point infront of the heli +150).

When the helicopter got close to the target the control logic changed: yaw angle was not controlled at all, roll and pitch angle was set according to the forward and sideway distance from the target.

When the target was reached and velocity was small the helicopter landed by setting the target z position to the height of the helipad - 10.

To do deliveries there was some other simple logics like checking the fuel: when we only got 800 fuel but still a great distance until the target, the current target was saved and the target was set to the closest helipad to the position 1km in front of the helicopter (this required all helipad coordinates from job requests, looking forward was useful because this way the helicopter did not try to turn around while moving 25m/s).

When taking deliveries our AI filtered the jobs based on a simple estimation to see if we could deliver the job in time, then tried to choose the closest available job.

This simple AI could get a fair amount of points in four hours. The top reasons of failure were crashes into mountains and out of fuel, both caused by the above 1000m high mountains in the middle of the terrain. When only a few AIs were flying then the passive helicopters parking on the helipads also caused some crashes (so we added some extra logic to land slowly and frag those helis).

A better AI would have used offline calculated routes based on accumulated terrain data and helipad coordinates. Mountain avoidance could be done in a better way than just moving upwards. Choosing the right job mattered a lot. Turning maneuvers can take advantage of rolling, not just yaw. So there are many places for improvement.

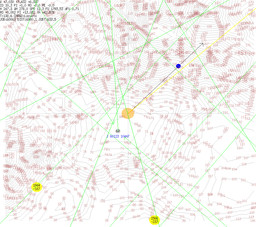

AI after taking on a delivery (blue thing marks the sector where the terrain height is checked, yellow arrow points to the target, there are many useful infos in the upper left corner, WH is the wanted height, JOBT is the remaining time from the job and so on)

Networking

This game has rather strong network bandwidth and latency requirements. This was expected from the start, and this is why the 10 Hz update rate was chosen instead of something higher (10 Hz is probably the lowest that we could get away with and still provide a sensation of motion rather than a slide show; it also affects the accuracy of the controllers connected to the system).

It is very important that the update packets, however large they are, get to the client within 0.1s, so that the client may have a chance to respond and update its controllers. There was some effort spent on making the updates slightly smaller (e.g. this is why only one map sector is sent in one cycle, in that noticeable spiral order). There was quite a lot of testing done over various network mediums (LAN and WAN; even centralised OpenVPN).

Our experience was generally good, but I found a single PC on which the AIs always crashed themselves. The reason was that the packets on that PC got "bunched up" in about groups of three that were received all at once. Other PCs on the same LAN worked just fine (even over the internet), and the problem came up from the single PC even when it connected to a simserver running on another PC of the same LAN; this made me think of the problem as a NIC or configuration issue.

Unfortunately, the same problem occurred for a single team during the contest. Our network guy tried to investigate the issue, and found some peculiar things - finally we decided that the reason is probably the fantastically intricate network setup of this particular team (also, they used a MAC address that they never registered with us, and this made a lot of packets bounce around our network uselessly - or so I understand from the account of our network guy). However, it would be a good idea to check what effect setting TCP_NODELAY on the server side would have on this - it might provide a solution and thus would've saved some trouble for us and for this one team.

The other problem we had during the contest was with a Windows-based team, who complained that they couldn't log in to the server again after they'd killed their AI from the Visual Studio Debugger (the control server complained that the "helicopter is controlled already"). Apparently, Windows doesn't send a TCP FIN packet for the open sockets a program has, if that program is killed from the debugger. Our Linux PC kept the socket open, but the Windows kernel has totally forgotten about the socket. This caused some trouble, since we didn't have a way of closing control connections. Finally, I tried to GDB into ctrl (which is tricky, it being a running process with lots of real-time duties), and succeeded in only killing it off (closing the sockets in the process)... Fortunately, this didn't affect the simulation, ctrl was restarted easily enough, and nothing worse came from this than some inconvenience for the teams. The lesson we got from this is that we should have a way of kicking connections (and maybe some kind of timeout or PING mechanism).

Bonus

~22 hours of heli activity plotted onto the map, condensed into a 7 min video - each frame is 3s real time (33 megs, 1024x1024 60 fps h.264 avi)